As artificial intelligence evolves, it is no longer limited to just text-based interactions. Enter Multimodal AI—a groundbreaking advancement where AI can process and understand multiple types of data simultaneously, including text, images, audio, and video. This shift is redefining how AI interacts with humans, making machines smarter, more intuitive, and highly adaptable across various industries.

What is Multimodal AI?

Traditional AI models, like chatbots and virtual assistants, typically rely on single-modal input, meaning they process only one type of data at a time (e.g., text-only chatbots).

Multimodal AI, however, can analyze different types of inputs at once. For example:

✔️ A voice assistant can process your speech tone (audio) while analyzing facial expressions (visual data) to estimate your mood.

✔️ A healthcare AI can assess a patient’s symptoms (text input) along with medical images (X-rays, MRI scans) for better diagnostics.

✔️ Autonomous vehicles can combine visual data (road signs, pedestrian movement) with audio cues (horns, sirens) to make real-time driving decisions.

By integrating multiple sources of data, AI gains a deeper contextual understanding, leading to more accurate, human-like interactions.
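To make "integrating multiple sources of data" concrete, here is a minimal Python sketch of late fusion, one common way to combine modalities. Each modality has its own model, and their predictions are merged afterwards. It reuses the mood example above. The labels, probabilities, weights, and the `late_fusion` helper are all hypothetical and hard-coded for illustration. Real systems usually learn how to fuse the modalities, or combine features earlier inside a single model, rather than using fixed weights.

```python
# Hypothetical mood labels shared by both modality models.
LABELS = ["happy", "neutral", "frustrated"]


def late_fusion(predictions: dict[str, list[float]],
                weights: dict[str, float]) -> list[float]:
    """Combine per-modality probability vectors with a normalized weighted average."""
    total_weight = sum(weights[m] for m in predictions)
    fused = [0.0] * len(LABELS)
    for modality, probs in predictions.items():
        w = weights[modality] / total_weight  # normalize so weights sum to 1
        for i, p in enumerate(probs):
            fused[i] += w * p
    return fused


# Hard-coded stand-ins for the outputs of separate audio and vision models.
audio_probs = [0.2, 0.3, 0.5]   # speech tone leans "frustrated"
vision_probs = [0.1, 0.2, 0.7]  # facial expression leans "frustrated" more strongly

fused = late_fusion({"audio": audio_probs, "vision": vision_probs},
                    weights={"audio": 0.4, "vision": 0.6})
best = LABELS[max(range(len(LABELS)), key=lambda i: fused[i])]
print([round(p, 2) for p in fused], best)  # → [0.14, 0.24, 0.62] frustrated
```

Neither modality decides alone. If the voice sounded calm but the face looked upset, the weighted combination would reflect both signals. That combination is the "deeper contextual understanding" described above, in its simplest form.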

Key Benefits of Multimodal AI

🔍 1. Context Awareness & Smarter AI Responses

Unlike single-modal AI, which can misread intent when it lacks context, multimodal AI combines multiple data points to provide more precise and meaningful responses.

Example:

📸 Google Lens can recognize text in a photo and translate it in place, combining image understanding with language translation.

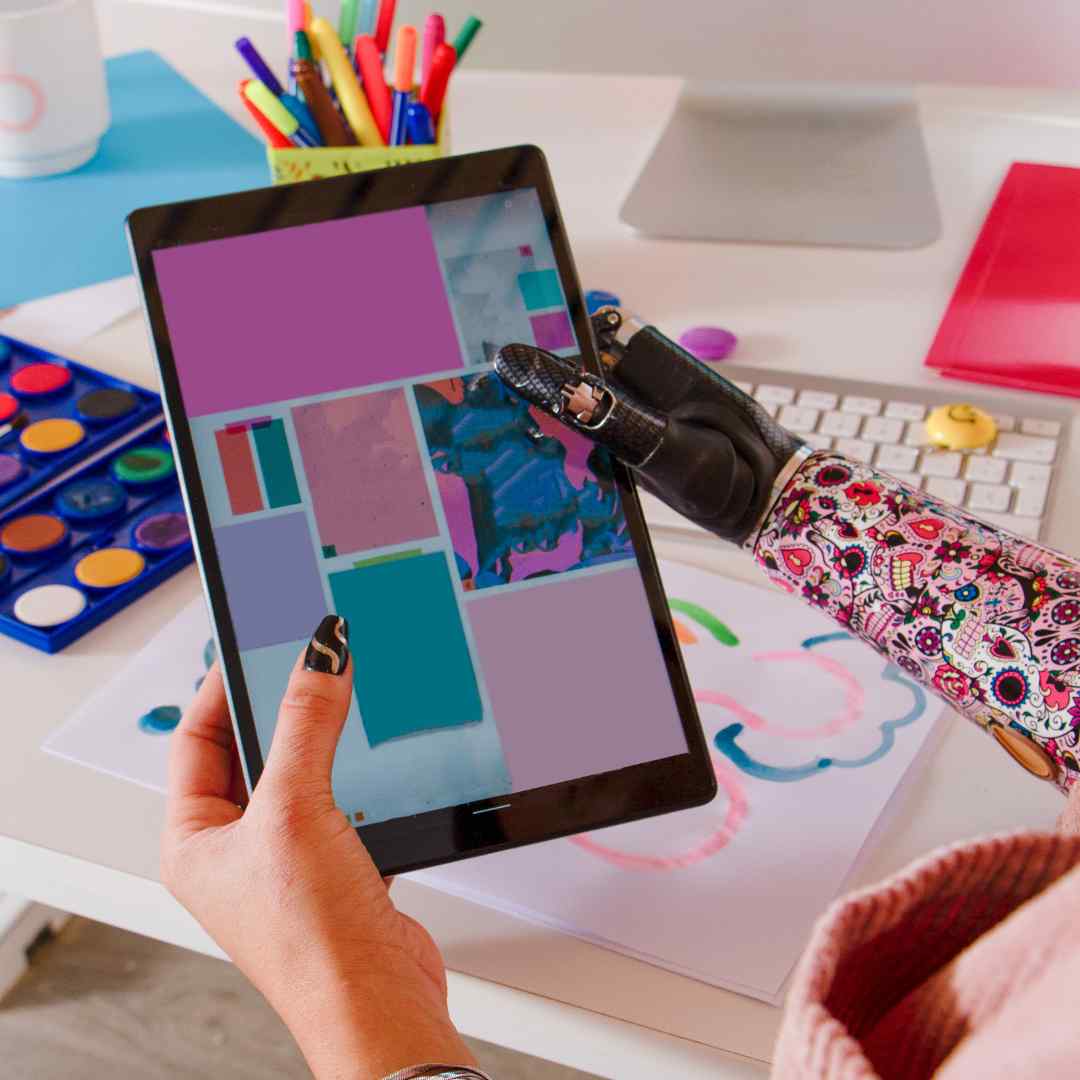

🏥 2. Transforming Healthcare & Medical Diagnostics

Multimodal AI is revolutionizing healthcare by analyzing text-based patient reports, medical imaging, and voice descriptions simultaneously.

Example:

🔬 AI can help flag early-stage diseases by combining X-ray scans, patient history, and doctors’ voice notes, supporting a more comprehensive diagnosis.

🎨 3. AI-Powered Creativity & Content Generation

AI is now capable of creating multimodal content, blending text, images, and videos seamlessly.

Example:

🎥 Generative AI tools like OpenAI’s DALL·E and Google’s Imagen turn text prompts into images and artwork, while newer models extend the same approach to video and music, reshaping the future of content creation.

🚗 4. Enhancing Autonomous Vehicles & Robotics

Multimodal AI is critical for self-driving cars and robots, allowing them to process data from cameras, LiDAR sensors, GPS, and audio cues all at once.

Example:

🚦 Self-driving cars use multimodal AI to detect road signs, identify pedestrians, and react to audio cues such as emergency-vehicle sirens, supporting safer navigation.

Challenges & Ethical Concerns

While Multimodal AI offers massive potential, it also comes with challenges:

⚠️ Data Privacy Risks – Combining voice recordings, images, and video of people means collecting more personal and biometric data, which raises privacy and security concerns.

⚠️ AI Bias & Ethical Concerns – Bias in the training data for any single modality can carry over into the combined system, so ensuring fair, unbiased decision-making in multimodal systems is still a challenge.

⚠️ High Computational Costs – Multimodal AI requires advanced infrastructure and large-scale data processing, making it expensive to implement.

The Future of Multimodal AI

With AI becoming smarter and more human-like, multimodal AI will play a crucial role in:

🚀 Personalized AI Assistants – AI that sees, hears, and understands users for a more interactive experience.

🏥 AI-Driven Healthcare – Improved medical diagnostics & robotic-assisted surgeries.

🎬 Advanced Content Creation – AI-generated videos, movies, and music compositions.

🚘 Next-Gen Autonomous Systems – Smarter self-driving cars, robots, and IoT devices.

Multimodal AI is not just the future—it’s already here! As technology advances, AI will continue to integrate multiple data sources, making it an indispensable part of our daily lives.

💡 What are your thoughts on Multimodal AI? Could it redefine the way we interact with technology? Let’s discuss in the comments! 👇

#MultimodalAI #FutureOfAI #AIRevolution #SmartTechnology #ArtificialIntelligence